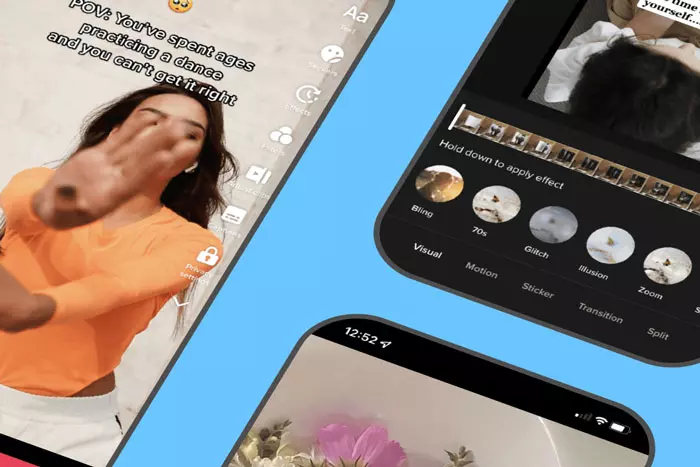

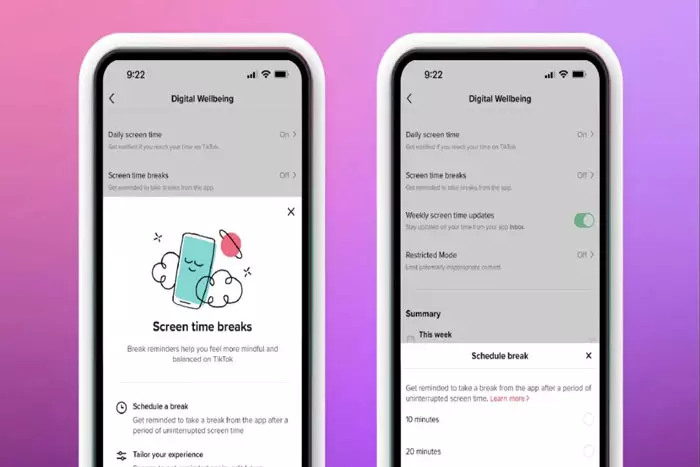

TikTok is launching hashtag filters and new safety tools that give users more ways to limit unwanted content in the app, in response to several investigations into how it protects younger users.

TikTok is adding content-control features and hashtag filters to its For You and Following feeds to help protect younger users.

“Content Levels” is the name of the company’s new system for restricting certain types of content from being shown to teenagers, and an early version will launch soon.

TikTok is also expanding its restrictions on potentially harmful content, including adult content, dieting, extreme fitness and depression, among other topics.

In December 2021, TikTok began testing ways to reduce the potential harms of algorithmic amplification by limiting how many videos from sensitive categories are surfaced in users’ For You feeds.

TikTok is Launching Hashtag Filters and New Safety Tools

According to TikTok:

“As a consequence of our tests, we have improved the watching experience by displaying fewer videos concerning these themes at once. We are still revising this work due to its complexities. For example, some forms of content, such as disordered eating recovery information, may have both positive and negative elements”.

This is an interesting line of work: it aims to stop people from falling down online rabbit holes and fixating on potentially harmful content. Limiting how much content on a single topic appears at any one time could have a positive effect on user behaviour.
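To make the idea of showing “fewer videos about these topics at a time” concrete, here is a minimal Python sketch. It is not TikTok’s implementation, which has not been published. It assumes a hypothetical ranked list of candidate videos, each tagged with an optional `sensitive_topic`, and hypothetical parameters `window` and `max_per_topic`: a sensitive video is held back if its topic already appears too often among the most recent videos in the feed, and it is retried once enough other videos have been shown.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Video:
    video_id: str
    # Hypothetical label, e.g. "dieting"; None means the video is not sensitive.
    sensitive_topic: Optional[str] = None


def can_place(feed: list[Video], video: Video, window: int, max_per_topic: int) -> bool:
    """True if appending `video` keeps its topic at most `max_per_topic`
    times within any run of `window` consecutive videos."""
    if video.sensitive_topic is None:
        return True
    # Only the previous window - 1 videos share a window with the new one.
    recent = feed[-(window - 1):] if window > 1 else []
    count = sum(1 for v in recent if v.sensitive_topic == video.sensitive_topic)
    return count < max_per_topic


def diversify(ranked: list[Video], window: int = 5, max_per_topic: int = 1) -> list[Video]:
    """Greedy re-ordering of a ranked feed (an illustrative assumption).
    Videos that never fit are simply left out of this session's feed."""
    feed: list[Video] = []
    deferred: list[Video] = []
    for video in ranked:
        if not can_place(feed, video, window, max_per_topic):
            deferred.append(video)
            continue
        feed.append(video)
        # The window has moved on, so retry held-back videos in ranked order.
        still_deferred = []
        for held in deferred:
            if can_place(feed, held, window, max_per_topic):
                feed.append(held)
            else:
                still_deferred.append(held)
        deferred = still_deferred
    return feed
```

With `window=3` and `max_per_topic=1`, a ranking of A (dieting), B (dieting), C, D, E, F, G (dieting) becomes A, C, D, B, E, F, G: the second dieting video is pushed back until two other videos separate it from the first. Dropping leftovers rather than appending them at the end is a deliberate simplification.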

TikTok prohibits explicit content but allows “mature/complicated issues that may depict past stories or real-world events”. With Content Levels, such material will be categorized and given a maturity score, making it inaccessible to users aged 13 to 17.

TikTok says:

“We’ll release an early version in the next few weeks to keep audiences between the ages of 13 and 17 from seeing content with extremely mature themes. When a video contains mature or sophisticated issues, such as fictional scenarios that may be too scary or dramatic for young viewers, a maturity score is applied to prevent anyone under 18 from accessing it”.

Initially, TikTok’s Trust and Safety moderators will assign scores to videos that are growing in popularity or have been reported by users. Eventually, the system will be expanded to offer filtering options to the whole community, not just teenagers.

Ultimately, the system will let creators categorize their own content, much as films, TV shows and video games carry age ratings.
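The gating rule described above can be sketched in a few lines of Python. This is a hypothetical model, not TikTok’s code: the `Maturity` levels, the `max_level` preference (standing in for the future filtering option for all users) and the choice to treat not-yet-scored videos as general are all assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import IntEnum
from typing import Optional


class Maturity(IntEnum):
    # Hypothetical two-level scale; TikTok has not published its actual levels.
    GENERAL = 0
    MATURE = 1


@dataclass
class Video:
    video_id: str
    # None = not yet reviewed (initially only popular or reported videos get a score).
    maturity: Optional[Maturity] = None


@dataclass
class Viewer:
    age: int
    # Hypothetical personal preference, modelling the later opt-in filter for everyone.
    max_level: Maturity = Maturity.MATURE


def is_visible(video: Video, viewer: Viewer) -> bool:
    """Hide mature-scored videos from under-18s, then apply the viewer's own limit."""
    # Assumption: unscored videos are treated as general audience.
    level = video.maturity if video.maturity is not None else Maturity.GENERAL
    if viewer.age < 18 and level >= Maturity.MATURE:
        return False
    return level <= viewer.max_level
```

In this model a 15-year-old never sees a video scored `MATURE`, an adult sees it by default, and an adult who lowers `max_level` to `GENERAL` opts out of it as well, which mirrors the age-rating comparison in the text.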

TikTok will soon offer hashtag filters that give users more control over what appears in their For You and Following feeds. Users can choose specific words or hashtags to block, and the app will automatically filter matching videos out of their feeds.

The feature is designed to do more than screen out objectionable or mature content; it can also stop TikTok’s algorithm from surfacing topics that users are tired of seeing or simply don’t care about. For example, you could use it to hide dairy or meat recipes if you’re going vegan, or to stop seeing home-improvement tutorials once you’ve finished your project.

You can now block specific hashtags from a clip’s ‘Details’ page. So if, for whatever reason, you don’t want to see any more videos tagged #icecream (a strange example, TikTok), you can set this in your preferences. You can also filter out videos whose descriptions contain selected keywords.

This isn’t perfect, because the filter doesn’t analyse the video itself, only the text that creators have typed into their descriptions. So if you have a phobia of ice cream, you could still come across unsettling imagery in the app, but the feature does give you a new way to control your experience.

- TikTok says the feature will be available to all users in the next few weeks.
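The limitation described above (the filter reads descriptions, not pixels) is easy to see in a small sketch. The Python below is a hypothetical model of a hashtag and keyword filter, not TikTok’s actual matching logic; the `Video` type, the case-insensitive matching and the whole-phrase keyword rule are all assumptions.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class Video:
    video_id: str
    # Creator-entered text only: the filter never looks at what the video shows.
    description: str


HASHTAG = re.compile(r"#(\w+)")


def normalise(term: str) -> str:
    """Case-insensitive form of a term, with any leading '#' removed."""
    return term.lstrip("#").casefold()


def is_filtered(video: Video, blocked_hashtags: set[str], blocked_keywords: set[str]) -> bool:
    desc = video.description.casefold()
    tags = {t.casefold() for t in HASHTAG.findall(video.description)}
    if tags & {normalise(h) for h in blocked_hashtags}:
        return True
    # Match each blocked keyword or phrase as whole words in the description.
    return any(re.search(rf"\b{re.escape(normalise(k))}\b", desc) for k in blocked_keywords)


def filter_feed(feed: list[Video], blocked_hashtags: set[str], blocked_keywords: set[str]) -> list[Video]:
    return [v for v in feed if not is_filtered(v, blocked_hashtags, blocked_keywords)]
```

Blocking `#IceCream` and the keyword “ice cream” removes a video tagged #icecream and one whose caption mentions an ice cream shop, but a video captioned “Summer treat #dessert” still gets through, even if it shows nothing but ice cream.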

The new moderation measures follow a 2021 congressional inquiry into whether the algorithmic recommendations of social apps such as TikTok promote eating-disorder content to younger users. More recently, TikTok has been sued by parents whose children allegedly died after attempting dangerous stunts they saw on the app.

That raises a question: what kind of entity recognition does TikTok use, what can its algorithm actually identify in videos, and by what criteria?

TikTok’s system is fairly advanced, which is why its algorithm is so good at keeping people scrolling: it can identify the content you are most likely to engage with based on your past activity.

The more elements TikTok can identify, the more signals it has for matching you with clips, and its algorithm does appear to be getting better at recognizing features in uploaded videos.

As mentioned earlier, the changes come as TikTok continues to face scrutiny in Europe over its failure to protect underage users from inappropriate content.

A month ago, TikTok pledged to change its rules on branded content after an EU investigation found that it had “failed in its responsibility” to protect young users from inappropriate content and hidden advertising. Separately, reports indicate that a number of children have been seriously injured, and some have died, while attempting dangerous challenges circulating on the app.

It will be interesting to see whether these new tools convince regulators that TikTok is doing everything it can to keep its young audience safe.

I have my doubts, though. Short-form video rewards attention-grabbing gimmicks and stunts, so shocking, surprising and provocative content generally performs better in this format.

As a result, TikTok’s model, at least in part, encourages creators to keep posting potentially dangerous videos in the hope that they will go viral on the app.

I am a marketing researcher and journalist at Likes Geek with over five years of experience in media and content marketing, and a background in the international news and financial technology publishing industries. I manage content and the editorial team at Likes Geek.